Screencast: Zeitgeist Development – Part I

This is the first episode of my very first screencast series on “Zeitgeist Development”. It’s about writing a simple extension that helps me analyse the memory consumption of the daemon.

Let’s have a quick look at both files created as part of the extension:

mem_profiler.py

    import dbus
    import dbus.service
    import tempfile

    from meliae import scanner

    from _zeitgeist.engine.extension import Extension
    from _zeitgeist.engine import constants

    # D-Bus object path under which the extension is exported
    PATH = "/org/gnome/zeitgeist/memory_profiler"

    class MemoryProfiler(Extension, dbus.service.Object):

        def __init__(self, engine):
            Extension.__init__(self, engine)
            # export this object on the session bus
            dbus.service.Object.__init__(
                self, dbus.SessionBus(), PATH
            )

        @dbus.service.method(constants.DBUS_INTERFACE, out_signature="s")
        def MakeSnapShot(self):
            # dump all objects known to the daemon and return the file name
            filename = tempfile.mktemp(suffix=".json")
            scanner.dump_all_objects(filename)
            return filename

setup.py

    from distutils.core import setup

    setup(
        name="zeitgeist-memory-profiler",
        version="0.1",
        data_files=(
            ("share/zeitgeist/extensions", ["mem_profiler.py",]),
        )
    )
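
For a first look at a snapshot file, the standard library is enough. The following is only a sketch: it assumes the dump written by `dump_all_objects()` holds one JSON object per line with `type` and `size` keys. The helper name `summarize_dump` and the sample records are made up for illustration.

```python
import json
from collections import defaultdict


def summarize_dump(lines):
    """Aggregate object count and total size per type.

    `lines` is any iterable of strings, e.g. an open dump file.
    Assumption: one JSON object per line with "type" and "size" keys.
    """
    totals = defaultdict(lambda: [0, 0])  # type name -> [count, bytes]
    for line in lines:
        line = line.strip()
        if not line:
            continue
        obj = json.loads(line)
        entry = totals[obj["type"]]
        entry[0] += 1
        entry[1] += obj.get("size", 0)
    # Largest total size first.
    return sorted(
        ((name, count, size) for name, (count, size) in totals.items()),
        key=lambda row: row[2],
        reverse=True,
    )


# Made-up sample records standing in for a real snapshot file.
sample = [
    '{"address": 1, "type": "dict", "size": 280, "refs": []}',
    '{"address": 2, "type": "str", "size": 40, "refs": []}',
    '{"address": 3, "type": "str", "size": 60, "refs": []}',
]
for name, count, size in summarize_dump(sample):
    print("%-10s %5d objects %8d bytes" % (name, count, size))
```

With a real snapshot, pass the open file instead of the sample list, e.g. `summarize_dump(open(filename))`.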

That’s it for the first episode. The next video will be about analysing the data we get from meliae.

Update: I uploaded a new version, hopefully with less blur and better quality. I am still learning how to use YouTube.

On Zeitgeist optimization

Last weekend I asked myself: “How fast is zeitgeist, and can we make zeitgeist even faster?” That turned out to be too general a question, because zeitgeist has various places where performance matters, so I decided to take a first look at some very basic FindEvents queries.

To get a first impression of how fast some commonly used queries are, I wrote a small benchmarking tool which gives me some timings and is also able to produce some nice plots.
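
The tool itself is not shown here, but the timing part of such a benchmark can be sketched in a few lines. Everything below is an assumption for illustration: the single `event` table is a stand-in for a real activity log, and the two queries are invented rather than real FindEvents queries. Plotting is left out.

```python
import sqlite3
import time


def time_query(conn, sql, params=(), repeat=5):
    """Return the best wall-clock time in seconds over `repeat` runs."""
    best = float("inf")
    for _ in range(repeat):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        best = min(best, time.perf_counter() - start)
    return best


# Hypothetical stand-in for an activity log: one table, 50000 rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE event (id INTEGER PRIMARY KEY, timestamp INTEGER, actor TEXT)"
)
conn.executemany(
    "INSERT INTO event (timestamp, actor) VALUES (?, ?)",
    ((i, "app%d" % (i % 10)) for i in range(1, 50001)),
)

queries = {
    "all events": ("SELECT id FROM event", ()),
    "one actor": ("SELECT id FROM event WHERE actor = ?", ("app3",)),
}
timings = {
    name: time_query(conn, sql, params)
    for name, (sql, params) in queries.items()
}
for name, seconds in sorted(timings.items()):
    print("%-12s %.2f ms" % (name, seconds * 1000))
```

Taking the best of several runs keeps one-off hiccups (cold caches, a busy machine) out of the numbers.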

The first plot gave me an overview: the speed of these queries varies from a few milliseconds to over half a second. But as you can see, the slower queries all have a red border around their bar, which means that we are not using our SQL indices for these queries. So the first step of this optimization story was to change the queries so that they use the index they should.

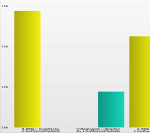
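
One way to check whether SQLite uses an index for a query is `EXPLAIN QUERY PLAN`. The sketch below uses a made-up `uri` table and a prefix search as the example; it is not taken from the zeitgeist schema or code, and it assumes SQLite's default settings (case-insensitive `LIKE`, index with the default collation).

```python
import sqlite3


def uses_index_search(conn, sql):
    """True if every step of SQLite's query plan is a SEARCH, not a SCAN."""
    plan = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The human-readable description is the last column of each plan row.
    return bool(plan) and all(row[-1].startswith("SEARCH") for row in plan)


# Hypothetical table and index, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE uri (id INTEGER PRIMARY KEY, value TEXT)")
conn.execute("CREATE INDEX uri_value ON uri (value)")

# Prefix search written with LIKE: SQLite walks over all rows.
like_query = "SELECT id FROM uri WHERE value LIKE 'file:///home/%'"
# The same prefix written as a range ('0' is the character after '/'):
# SQLite can jump into the index.
range_query = (
    "SELECT id FROM uri "
    "WHERE value >= 'file:///home/' AND value < 'file:///home0'"
)

for sql in (like_query, range_query):
    print(uses_index_search(conn, sql), sql)
```

Note that the two queries are not strictly equivalent: `LIKE` ignores ASCII case by default, while the range comparison does not.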

And voila, since yesterday these queries are several times faster, as you can see in this plot. The yellow series shows the same data as the first plot, and the additional series in cyan shows how fast the same queries are after this first step of optimization. Pretty impressive.

But we can do even better! Until now I looked exclusively at the class of queries where the timerange argument is ‘TimeRange.always()’, which is already optimized. So my next question was: “What happens if we do not query over the whole period of time, but only over a random interval?” To understand the next plot you have to know that all events in my sample activity log (which contains 50000 events) have a timestamp greater than 0 and lower than 50000, so ‘TimeRange.always()’ and the interval ‘(1, 60000)’ return the same result.

The plot is a bit harder to read: each pair of a yellow and a cyan bar describes the same kind of query, using the same codebase. The only difference is that the yellow bars use a concrete time interval, whereas the cyan ones use the already optimized ‘TimeRange.always()’. Remember, both types return the same results. And as you can see, ‘TimeRange.always()’ is up to three times faster!

But I already have a fix. Take a look at this one: the yellow and purple bars are the same as in the last plot, and the cyan series shows the upcoming optimization, which will hopefully land in zeitgeist soonish. Querying on random time intervals will then be roughly as fast as querying the complete time period.
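
The already optimized case boils down to not adding a timestamp condition when the requested range covers everything anyway. A minimal sketch of that idea follows; `ALWAYS`, `build_where` and the column name `timestamp` are hypothetical stand-ins, not the engine's actual code.

```python
MAX_STAMP = 2 ** 63 - 1
# Hypothetical stand-in for TimeRange.always(): a range covering everything.
ALWAYS = (-MAX_STAMP, MAX_STAMP)


def build_where(time_range, other_conditions=()):
    """Return (where_clause, params).

    The timestamp test is skipped when the range is ALWAYS, because it
    could not filter out any row.
    """
    conditions = list(other_conditions)
    params = []
    if tuple(time_range) != ALWAYS:
        conditions.append("timestamp >= ? AND timestamp <= ?")
        params.extend(time_range)
    clause = " AND ".join("(%s)" % c for c in conditions)
    return clause, params


print(build_where(ALWAYS))
print(build_where((1, 60000)))
```

For the complete range the clause stays empty, so the database has no timestamp comparison to evaluate at all.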

Zeitgeist 0.6 `Buzzer Beater` released!

On behalf of the Zeitgeist team I am proud to announce the release of Zeitgeist 0.6 `Buzzer Beater`.

What is Zeitgeist?

Zeitgeist is a service which logs the user’s activities and events, anywhere from files opened to websites visited and conversations, and makes this information readily available for other applications to use. It is also able to establish relationships between items based on similarity and usage patterns.

Where?

Website: http://zeitgeist-project.com/

Launchpad Project (with bug tracker): https://launchpad.net/zeitgeist

Wiki: http://live.gnome.org/Zeitgeist

New since 0.5.2

Engine:

 - Added 'zeitgeist-integrity-checker.py' tool to check the integrity of an activity log.
 - Optimized ZeitgeistEngine.find_related_uris() by using a different algorithm.
 - Improved database updates (LP: #643303, #665607). The update scripts can now handle version jumps (e.g. from core_n to core_n+4). Database downgrades are also possible if the schema versions are backward-compatible.
 - If FindEvents queries are run over the complete TimeRange interval, don't add timestamp conditions to the SQL statement (LP: #650930).
 - Improved speed of prefix-search queries by always using the index (LP: #641198).

Python API:

 - Added a bunch of new result types: MostPopularSubjectInterpretation, MostRecentSubjectInterpretation, LeastPopularSubjectInterpretation, LeastRecentSubjectInterpretation, MostPopularMimetype, LeastPopularMimetype, MostRecentMimetype and LeastRecentMimetype. Please see the API documentation for further details (LP: #655164).

Daemon:

 - Code improvements to zeitgeist-daemon (LP: #660415).
 - Fixed the `--log-level` option of zeitgeist-daemon. Library code does not set the log level anymore; the application using the Python library has to take care of it.

Overall:

 - 'zeitgeist-datahub' is not part of the zeitgeist project anymore. Please use the new datahub implementation written by Michal Hruby as a replacement [0] (LP: #630593).
 - Updates to the test suite.
 - Translation updates (added Asturian and Slovenian, various updates).
 - Added `make run` target to the root-level Makefile (LP: #660423).

[0] https://launchpad.net/zeitgeist-datahub

Access Launchpad using resty

Today, during my daily reading of Planet Python, I found out about resty, a wrapper around curl which simplifies access to RESTful web services.

It’s as easy as

    markus@thekorn:/tmp$ . resty
    markus@thekorn:/tmp$ resty https://api.edge.launchpad.net/1.0/
    https://api.edge.launchpad.net/1.0/*
    markus@thekorn:/tmp$ GET /bugs/123456 -H "Accept: application/json" |\
        python -c "import sys, json; print(json.load(sys.stdin)[sys.argv[1]])" description
    Binary package hint: amarok

    One of the podcasts at http://www.touchmusic.org.uk/TouchPod/podcast.xml crashes amarok. The podcast in question is Touch Radio 25. The other episodes I have tried seem to work fine. What happens with #25 is that I get a few hundred ms of sound and then amarok freezes for a little while before evaporating from the desktop altogether.

    I don't know whether or not the audio file in question is corrupt, but obviously amarok shouldn't crash even if it is.
    markus@thekorn:/tmp$

Very cool!
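
For comparison, the same request can be put together with Python 3's standard library. This is only a sketch: the helper names `build_request` and `extract_field` are invented, the request is built but not sent, and the response body is made up.

```python
import json
import urllib.request

BASE_URL = "https://api.edge.launchpad.net/1.0"  # base URL from the session above


def build_request(path):
    """Build (but do not send) a GET request asking for a JSON representation."""
    return urllib.request.Request(
        BASE_URL + path, headers={"Accept": "application/json"}
    )


def extract_field(json_text, field):
    """Pick one top-level field out of a JSON document, like the python -c one-liner."""
    return json.loads(json_text)[field]


request = build_request("/bugs/123456")
print(request.get_method(), request.full_url)

# Made-up response body for illustration; a real one would come from
# urllib.request.urlopen(request).read().
print(extract_field('{"id": 123456, "description": "example text"}', "description"))
```

Compared to this, the resty version is clearly the shorter one for quick experiments in a shell.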